Building an Autonomous Vehicle from Scratch: A Journey Through Robotics, AI, and Real-World Engineering

PS: Jump ahead for the technical presentation 🙂 (This article is still being updated; I am currently documenting and publishing my projects.)

Preface

This project represents a year-long journey of curiosity turning into hands-on learning. What started as wondering how self-driving cars actually work became an exploration through hardware design, algorithms, computer vision, and the reality of making theoretical concepts work in practice.

The Problem

The challenge wasn’t just making something that moves and avoids obstacles. It was creating a system that could map unknown territories, plan paths, detect objects, and adapt to changes while maintaining the robust, modular architecture that real systems require.

How do you build a system that can run reliably, handle edge cases, and be extended with new capabilities?

I quickly learned that the well-known algorithms (A*, RRT*, Kalman filters) are just the beginning. The real engineering happens in integration: fusing sensor data reliably, designing communication protocols between microcontrollers, and debugging an autonomous system where hardware, software, and algorithms interact in complex ways.

The Journey (Overview)

Genesis: Three Iterations of Learning

The project evolved through three distinct generations, each teaching me something fundamental about engineering:

Genesis Bot V1 was my first attempt at getting the hardware to work, using an ordinary RC car with Ackermann steering. However, integrating sensors such as encoders and an IMU into this modified platform proved challenging. RC cars weren’t designed to host these sensors or autonomous capabilities, which made physical and software integration much harder than expected, and the results unreliable. I tried magnetic encoders, 3D-printed external holders, and various other approaches, but after weeks of overengineering and hacks, I realized it would be more efficient to restart with a more suitable chassis. It was a valuable lesson in the engineering process, and an expensive one.

Genesis Bot V2 moved to a mecanum wheel chassis. The integration of sensors, microcontrollers, and microprocessors (Arduino and RPi4) was much more straightforward. I finally had access to everything and could start real coding. Everything went as smoothly as you can expect with debugging, redesigning, and trusting the process. Eventually I had an early version that I could control, where the whole pipeline worked and I wrote the necessary nodes and bridges in ROS to communicate with the Arduino.

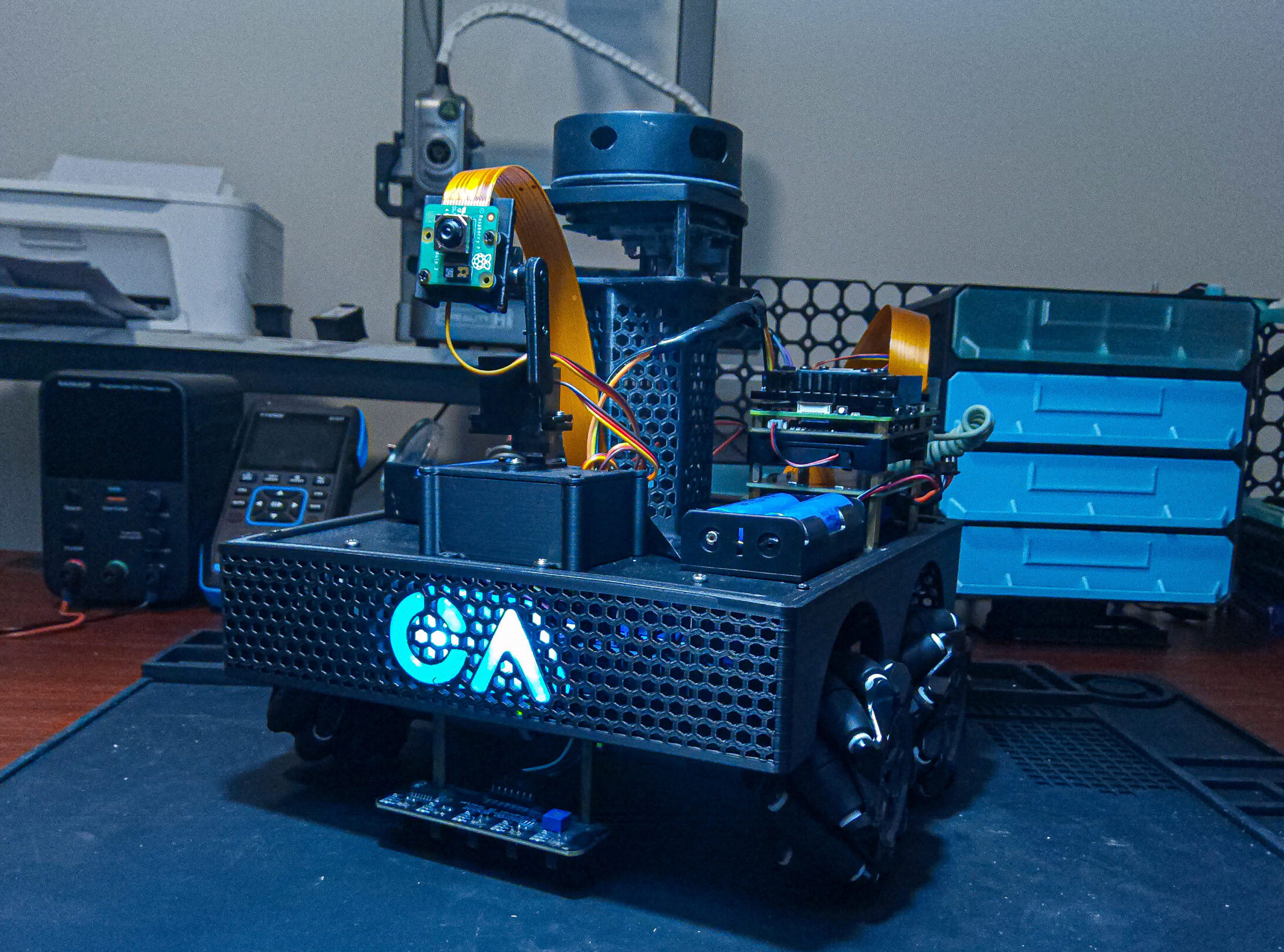

Genesis Bot V3 wasn’t a dramatic change like the first two versions; it mainly upgraded the computing hardware. I changed the camera, went from a Raspberry Pi 4 to a Raspberry Pi 5, and replaced the Arduino Mega with two ESP32s. I initially started with an STM32, but my system has physically separated functions (encoder reading, wheel control, IMU, and higher-level control). Using two ESP32s with the ESP-NOW communication protocol provided better separation of concerns, reduced cabling, and allowed for cleaner assembly.

Technical Implementation

Autonomous Navigation

The navigation system uses a frontier exploration algorithm I implemented from scratch: the robot explores unknown environments by identifying boundaries between mapped and unmapped areas. Path planning combines A* for global planning (finding paths in under 0.5 seconds) with RRT* for dynamic obstacle avoidance. After careful algorithm tuning and handling of edge cases, I achieved complete area coverage in mapping.
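To make the frontier idea concrete, here is a minimal sketch in Python. It is not the code running on the robot: the grid encoding (borrowed from the ROS occupancy grid convention of -1 for unknown, 0 for free, 100 for occupied), the 4-connected neighborhood, and the function names `find_frontiers` and `nearest_frontier` are all assumptions made for illustration.

```python
from typing import List, Optional, Tuple

# Assumed cell values, following the ROS occupancy grid convention.
UNKNOWN, FREE, OCCUPIED = -1, 0, 100

Grid = List[List[int]]
Cell = Tuple[int, int]  # (row, col)


def find_frontiers(grid: Grid) -> List[Cell]:
    """Return every free cell that touches at least one unknown cell."""
    rows, cols = len(grid), len(grid[0])
    frontiers: List[Cell] = []
    for r in range(rows):
        for c in range(cols):
            if grid[r][c] != FREE:
                continue
            # 4-connected neighbors: down, up, right, left.
            for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1)):
                nr, nc = r + dr, c + dc
                if 0 <= nr < rows and 0 <= nc < cols and grid[nr][nc] == UNKNOWN:
                    frontiers.append((r, c))
                    break
    return frontiers


def nearest_frontier(frontiers: List[Cell], robot: Cell) -> Optional[Cell]:
    """Pick the frontier cell closest to the robot (squared Euclidean distance)."""
    if not frontiers:
        return None  # nothing left to explore
    return min(
        frontiers,
        key=lambda f: (f[0] - robot[0]) ** 2 + (f[1] - robot[1]) ** 2,
    )
```

Choosing the nearest frontier is only one possible selection rule; a real explorer would typically also group frontier cells into clusters and weigh their size against travel cost.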

Computer Vision

The object detection pipeline uses OpenCV with custom algorithms for ball detection and pose estimation. The real challenge was making it work reliably under varying lighting conditions, different angles, and motion blur from a moving platform. The system uses HSV color space conversion with tuned thresholds, morphological operations for noise reduction, and geometric validation to distinguish real objects from false positives. It then uses the camera’s intrinsic parameters to estimate the position of the ball.
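One common way to get a position from camera intrinsics is the pinhole model with a ball of known physical diameter. The sketch below assumes that approach; the article does not spell out the exact method, and the `Intrinsics` fields, the `ball_position` function, and all numeric values in the usage note are hypothetical.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass
class Intrinsics:
    fx: float  # focal length in pixels along x
    fy: float  # focal length in pixels along y
    cx: float  # principal point x (pixels)
    cy: float  # principal point y (pixels)


def ball_position(
    intr: Intrinsics,
    u: float,
    v: float,
    pixel_diameter: float,
    ball_diameter_m: float,
) -> Tuple[float, float, float]:
    """Estimate the ball center in the camera frame (x right, y down, z forward).

    Assumes an undistorted image and a ball of known real diameter.
    """
    if pixel_diameter <= 0:
        raise ValueError("pixel_diameter must be positive")
    # Similar triangles: apparent size shrinks linearly with depth.
    z = intr.fx * ball_diameter_m / pixel_diameter
    # Back-project the pixel center to metric offsets at depth z.
    x = (u - intr.cx) * z / intr.fx
    y = (v - intr.cy) * z / intr.fy
    return x, y, z
```

For example, with a 600-pixel focal length, a 0.1 m ball that appears 30 pixels wide is estimated at about 2 m in front of the camera. The estimate is sensitive to errors in the detected diameter, which is one reason filtering over several frames helps.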

Low-Level Control

I implemented the control systems entirely in C/C++:

- PID-controlled wheel speeds with encoder feedback and anti-windup mechanisms

- Inverse kinematics for mecanum wheel control, translating robot motion into individual wheel commands

- Kalman filtering for sensor fusion, combining gyroscope and accelerometer data for heading estimation

- Safety systems with watchdog timers, connection monitoring, and emergency stops
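As an illustration of the first item, here is a small PID controller with one common anti-windup strategy, conditional integration. The firmware itself is written in C/C++; this sketch uses Python for readability, and the class layout, gains, and output limits are assumptions rather than the robot's actual values.

```python
from dataclasses import dataclass


@dataclass
class PID:
    kp: float
    ki: float
    kd: float
    out_min: float  # lower actuator limit (e.g. minimum PWM command)
    out_max: float  # upper actuator limit
    integral: float = 0.0
    prev_error: float = 0.0

    def update(self, setpoint: float, measured: float, dt: float) -> float:
        """Return the clamped control output for one time step."""
        if dt <= 0:
            raise ValueError("dt must be positive")
        error = setpoint - measured
        derivative = (error - self.prev_error) / dt
        self.prev_error = error

        # Tentatively integrate, then decide whether to keep the result.
        candidate = self.integral + error * dt
        output = self.kp * error + self.ki * candidate + self.kd * derivative
        clamped = max(self.out_min, min(self.out_max, output))

        # Anti-windup (conditional integration): keep the new integral only
        # if the output is not saturated, or if the error is pushing the
        # output back toward the allowed range.
        saturated_high = output > self.out_max
        saturated_low = output < self.out_min
        if (
            output == clamped
            or (saturated_high and error < 0)
            or (saturated_low and error > 0)
        ):
            self.integral = candidate
        return clamped
```

Without the conditional step, a wheel that is stalled or commanded beyond its top speed would keep accumulating integral error and overshoot badly once it is free to move again.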

Each system taught me about the gap between textbook formulas and working implementations, and how sensor readings are more like an idea of reality, with noise, false data, and many edge cases. Getting them to work reliably takes robust algorithms and a lot of testing.

System Architecture

The final architecture spans multiple ESP32 microcontrollers handling specialized tasks:

- Command, Control & Sensor ESP32: Sensor fusion, inverse kinematics, and PID control (encoder, IMU)

- Motor Control ESP32: Real-time motor control with PID loops

This distributed approach uses ESP-NOW for low-latency communication, with ROS2 handling higher-level coordination.

The low-level and high-level components are easy to swap: the high-level system produces a velocity vector, and the low-level control does its best to execute it. Either side can be replaced by another system with the same input/output interface.
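On the low-level side, turning that velocity vector into wheel commands is the job of the mecanum inverse kinematics. The sketch below uses the standard textbook formulation and is only an approximation of what the firmware does: the axis convention (x forward, y left, positive rotation counter-clockwise), the wheel order, and the parameter names are assumptions, and signs change with roller orientation and motor wiring.

```python
from typing import Tuple


def mecanum_inverse_kinematics(
    vx: float,
    vy: float,
    wz: float,
    wheel_radius: float,
    half_length: float,
    half_width: float,
) -> Tuple[float, float, float, float]:
    """Convert a body velocity into wheel angular speeds in rad/s.

    vx: forward speed (m/s), vy: leftward speed (m/s),
    wz: counter-clockwise yaw rate (rad/s).
    half_length / half_width: distances from the robot center to the
    wheel axes along x and y (m).
    Returns (front_left, front_right, rear_left, rear_right).
    """
    if wheel_radius <= 0:
        raise ValueError("wheel_radius must be positive")
    k = half_length + half_width  # lever arm for the rotation term
    front_left = (vx - vy - k * wz) / wheel_radius
    front_right = (vx + vy + k * wz) / wheel_radius
    rear_left = (vx + vy - k * wz) / wheel_radius
    rear_right = (vx - vy + k * wz) / wheel_radius
    return front_left, front_right, rear_left, rear_right
```

With this convention, a pure forward command spins all four wheels at the same speed, while a pure leftward command spins the two diagonals in opposite directions. Each returned speed would then serve as the setpoint of a per-wheel PID loop.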

Technology Stack

The project integrates:

- Hardware: LIDAR, RGB camera, Raspberry Pi, IMU, wheel encoders, ESP32s

- Software: ROS2, Python, C++, OpenCV

- Algorithms: Custom A*, RRT*, Kalman filtering, frontier exploration, PID control

Real Lessons Learned

This project taught me that the path from “works in simulation” to “works in the real world” is longer and more challenging than any course prepared me for. But it’s also where the most interesting engineering problems live.

The robot can now autonomously map unknown environments, navigate to goals while avoiding obstacles, detect and locate objects, and adapt based on sensor feedback. Beyond the functionality, this project represents learning to think like a robotics engineer: considering not just what the system should do, but how it should be built to do it reliably and extensibly.

Looking Forward

A lot of upgrades ahead! I’m pretty satisfied with my main path planning, which reliably produces paths in under 0.5 s. Ball detection and frontier exploration work, but they lack structure and I don’t think they’re reliable enough yet. I plan to implement better state machines and a system that keeps updating the ball’s estimated position as new data arrives.

Now for the exciting new features: This project continues to evolve, with plans for machine learning integration and more sophisticated perception systems. Each iteration teaches new lessons about the intersection of theoretical algorithms and practical engineering.

The autonomous vehicle industry is built on exactly these kinds of integrated systems challenges. This project gave me hands-on experience with the full stack, from low-level motor control to high-level path planning, that’s essential for contributing to the future of autonomous systems.

Technologies: ROS2, Python, C++, SLAM, A*, RRT*, OpenCV, LIDAR, Raspberry Pi, ESP32, Kalman Filtering, PID Control, Computer Vision, Autonomous Navigation

Technical Architecture

Distributed autonomous system integrating multiple ESP32 microcontrollers with ROS2 coordination

System Architecture

Genesis Bot V3 features a distributed architecture spanning multiple ESP32 microcontrollers, each handling specialized tasks with ESP-NOW for low-latency communication and ROS2 for higher-level coordination.

- Computing Platform: Raspberry Pi 5

- Microcontrollers: 2x ESP32

- Communication: ESP-NOW + ROS2

- Sensors: LIDAR, RGB Camera, IMU, Encoders

- Languages: C++, Python

Core Algorithms

Three main algorithmic tasks enabling autonomous navigation and object detection

Autonomous Mapping

Frontier exploration algorithm for mapping unknown environments using LIDAR data and RRT* path planning.

- Frontier Detection: Identify boundaries between mapped/unmapped areas

- RRT* Path Planning: Build tree of collision-free paths

- Dynamic Goal Selection: Choose optimal exploration targets

- Complete Area Coverage: Keep exploring until the whole reachable area is mapped

Navigation with Obstacles

Hybrid navigation system combining A* global planning with RRT* dynamic obstacle avoidance.

- A* Global Planning: Generate optimal paths under 0.5s

- Laser Scan Processing: Real-time obstacle detection

- RRT* Replanning: Dynamic path adjustment around obstacles

- Path Merging: Seamless integration of global and local paths
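The global planning step can be illustrated with a compact grid-based A*. This is a sketch under simplifying assumptions (4-connected grid, unit step cost, Manhattan heuristic, cells marked 1 are blocked), not the planner that runs on the robot, and it makes no claim about the timing figure above.

```python
import heapq
from typing import Dict, List, Optional, Tuple

Cell = Tuple[int, int]  # (row, col)


def astar(grid: List[List[int]], start: Cell, goal: Cell) -> Optional[List[Cell]]:
    """Return a shortest path from start to goal as a list of cells, or None."""
    rows, cols = len(grid), len(grid[0])

    def heuristic(cell: Cell) -> int:
        # Manhattan distance: admissible for 4-connected unit-cost moves.
        return abs(cell[0] - goal[0]) + abs(cell[1] - goal[1])

    open_heap = [(heuristic(start), 0, start)]  # (f, g, cell)
    came_from: Dict[Cell, Cell] = {}
    g_cost: Dict[Cell, int] = {start: 0}

    while open_heap:
        _, g, current = heapq.heappop(open_heap)
        if current == goal:
            # Walk the parent links back to the start.
            path = [current]
            while current in came_from:
                current = came_from[current]
                path.append(current)
            return path[::-1]
        if g > g_cost[current]:
            continue  # stale heap entry, a cheaper route was already found
        r, c = current
        for nr, nc in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            if not (0 <= nr < rows and 0 <= nc < cols) or grid[nr][nc] == 1:
                continue
            new_g = g + 1
            if new_g < g_cost.get((nr, nc), float("inf")):
                g_cost[(nr, nc)] = new_g
                came_from[(nr, nc)] = current
                heapq.heappush(
                    open_heap, (new_g + heuristic((nr, nc)), new_g, (nr, nc))
                )
    return None  # goal unreachable
```

In a hybrid setup like the one described here, a path of this kind would serve as the global reference, with the local planner replanning only the segment blocked by a newly detected obstacle.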

Search & Localize

Computer vision pipeline for object detection and pose estimation using OpenCV and camera triangulation.

- HSV Color Thresholding: Robust color-based detection

- Morphological Processing: Noise reduction and validation

- Camera Triangulation: 3D position estimation

- Temporal Tracking: Consistent object identification
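The thresholding and validation stages can be illustrated without OpenCV, using only the Python standard library on a tiny image. This is a teaching sketch, not the actual pipeline: the hue range, the saturation and value limits, and the bounding-box fill check are hypothetical choices. Note that hue is expressed in degrees (0 to 360) here, whereas OpenCV's 8-bit HSV images store hue as 0 to 179.

```python
import colorsys
from typing import List, Tuple

Pixel = Tuple[int, int, int]  # (r, g, b), each 0-255


def hsv_mask(
    image: List[List[Pixel]],
    hue_range_deg: Tuple[float, float],
    s_min: float,
    v_min: float,
) -> List[List[int]]:
    """Return a binary mask: 1 where the pixel falls inside the HSV thresholds."""
    h_lo, h_hi = hue_range_deg
    mask = []
    for row in image:
        mask_row = []
        for r, g, b in row:
            h, s, v = colorsys.rgb_to_hsv(r / 255, g / 255, b / 255)
            inside = h_lo <= h * 360 <= h_hi and s >= s_min and v >= v_min
            mask_row.append(1 if inside else 0)
        mask.append(mask_row)
    return mask


def bounding_box_fill(mask: List[List[int]]) -> float:
    """Fraction of the blob's bounding box that is set (0.0 for an empty mask).

    A filled disc covers roughly pi/4 (about 0.79) of its bounding box, so a
    ratio far from that value hints at a non-circular false positive.
    """
    cells = [(r, c) for r, row in enumerate(mask) for c, m in enumerate(row) if m]
    if not cells:
        return 0.0
    rs = [r for r, _ in cells]
    cs = [c for _, c in cells]
    area = (max(rs) - min(rs) + 1) * (max(cs) - min(cs) + 1)
    return len(cells) / area
```

Thresholding on hue with minimum saturation and value is what makes color detection more tolerant of lighting changes than thresholding directly in RGB, although the limits still need tuning per environment.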

Performance Metrics

Early Testing of Heading Control

Early tests of heading control, using a Kalman filter to fuse the IMU data, showed promising results. I was able to control and correct the robot’s heading, with better stability and more accurate directional tracking than with raw sensor data alone.
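To show the structure of such a filter, here is a minimal one-dimensional Kalman filter that predicts the heading by integrating the gyroscope rate and corrects it with an absolute angle measurement. Which sensor supplies that absolute angle, the noise values `q` and `r`, and the class name are assumptions; angle wrap-around at ±180° is ignored for brevity.

```python
class HeadingKalman:
    """Scalar Kalman filter: state = heading angle, input = gyro rate."""

    def __init__(self, q: float = 0.01, r: float = 0.5) -> None:
        self.angle = 0.0  # current heading estimate
        self.p = 1.0      # variance of the estimate
        self.q = q        # process noise variance added per second (assumed)
        self.r = r        # measurement noise variance (assumed)

    def predict(self, gyro_rate: float, dt: float) -> None:
        """Propagate the estimate by integrating the gyro rate over dt."""
        self.angle += gyro_rate * dt
        self.p += self.q * dt  # uncertainty grows while dead-reckoning

    def correct(self, measured_angle: float) -> float:
        """Blend in an absolute angle measurement and return the new estimate."""
        k = self.p / (self.p + self.r)  # Kalman gain in [0, 1)
        self.angle += k * (measured_angle - self.angle)
        self.p *= 1.0 - k  # uncertainty shrinks after a measurement
        return self.angle
```

A typical loop calls `predict` at the gyro rate and `correct` whenever an absolute measurement arrives. A larger `r` makes the filter trust the gyro integration more, which smooths noise but lets drift persist longer.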

Version 2 Control Pipeline: Node Communication and Integration Testing

This test covered the control pipeline of Version 2: the control logic, the communication between the different nodes, and the integration of the low-level and high-level systems. The focus was on validating data flow and message-passing reliability, and on ensuring smooth coordination between hardware drivers and application-level processes.